How AI Agents Leverage Tools to Operate in the Real World

In Agentic AI, a tool refers to any external resource—such as a system, interface, or API—that an agent can use to perform specific tasks. For instance, an AI agent might use a web search API to fetch up-to-date information or query a database to retrieve stored data.

Without access to tools, an AI model can only generate output from its training data and whatever appears in its prompt. It can’t fetch real-time information, execute code, or interact with external systems. Equipped with tools, it can look up current data, run computations, and act on external systems on the user’s behalf.

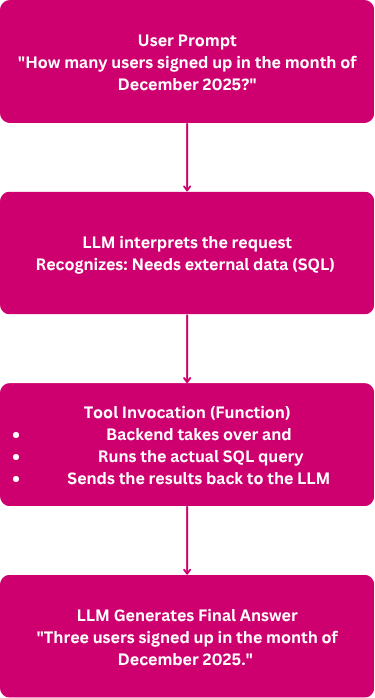

A Typical Workflow

Here’s how a typical AI tool-usage flow might work:

- The LLM receives a prompt:

  "How many users signed up in the month of December 2025?"

- It recognizes the nature of the task:

  This is a data retrieval task requiring access to the company’s user database.

- It selects the appropriate tool:

  The LLM decides to use a database query tool or call a backend API.

- The tool formulates a query, such as:

      SELECT COUNT(*) FROM users WHERE signup_date BETWEEN '2025-12-01' AND '2025-12-31';

- The tool executes the query and returns the result:

  For example, three.

- The LLM interprets and formats the output:

  "Three users signed up in the month of December 2025."

This loop—understanding the task, selecting the right tool, executing the action, and responding—enables AI agents to operate effectively in dynamic, real-world environments.

How to Implement Tooling in an Agentic AI System

The GitHub project SQL-Tool-Usage-In-Agentic-AI demonstrates how to integrate a SQL query tool into an agentic AI system. The language model is aware that an external SQL function exists and can decide to use it when it lacks the information to answer directly. However, the LLM does not execute SQL queries itself—you must implement and run the function that interacts with the database. This setup illustrates how LLMs can delegate tasks to external tools when needed.
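As a rough illustration of the function you would need to supply yourself, here is a minimal sketch backed by SQLite. The in-memory database, the `users` table layout, and the sample rows are assumptions made for this example and are not taken from the GitHub project. The function name matches the tool used throughout this article. The query uses bound parameters and a half-open date range, which keeps working if `signup_date` stores full timestamps rather than plain dates.

```python
import sqlite3

# Hypothetical demo database: an in-memory SQLite table with a few sample rows.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, signup_date TEXT)")
conn.executemany(
    "INSERT INTO users (signup_date) VALUES (?)",
    [("2025-11-30",), ("2025-12-01",), ("2025-12-15",), ("2025-12-31",), ("2026-01-01",)],
)


def get_user_signups_by_month_year(month, year):
    """Count users whose signup_date falls in the given month and year."""
    # Half-open range: [first day of the month, first day of the next month).
    start = f"{year:04d}-{month:02d}-01"
    next_year, next_month = (year + 1, 1) if month == 12 else (year, month + 1)
    end = f"{next_year:04d}-{next_month:02d}-01"
    row = conn.execute(
        "SELECT COUNT(*) FROM users WHERE signup_date >= ? AND signup_date < ?",
        (start, end),
    ).fetchone()
    return row[0]


print(get_user_signups_by_month_year(12, 2025))  # 3 for the sample rows above
```

Because the month and year are passed as bound parameters rather than spliced into the SQL string, values chosen by the model cannot change the structure of the query.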

The two key elements that make this work are:

1. Defining the Tool with Function Metadata

A JSON schema defines the tool’s name, description, and input parameters. It tells the LLM when and how to call the tool and serves as a contract between the agent and the external function.

    tool = {
        "type": "function",
        "function": {
            "name": "get_user_signups_by_month_year",
            "description": "Return the number of users who signed up in the given month and year.",
            "parameters": {
                "type": "object",
                "properties": {
                    "month": {
                        "type": "integer",
                        "description": "The month number (1-12)"
                    },
                    "year": {
                        "type": "integer",
                        "description": "The 4-digit year"
                    }
                },
                "required": ["month", "year"]
            }
        }
    }
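The schema describes the arguments the model is expected to send, but the arguments arrive as a JSON string written by the model, so they may be malformed or out of range. The sketch below shows one way to check them before calling the real function. The helper name `parse_signup_args` and the specific range checks are assumptions for illustration, not part of the original project.

```python
import json


def parse_signup_args(raw_arguments):
    """Parse and validate the JSON argument string of a tool call."""
    args = json.loads(raw_arguments)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(args, dict):
        raise ValueError("arguments must be a JSON object")

    month = args.get("month")
    year = args.get("year")

    # bool is a subclass of int in Python, so reject it explicitly.
    for name, value in (("month", month), ("year", year)):
        if not isinstance(value, int) or isinstance(value, bool):
            raise ValueError(f"{name} must be an integer, got {value!r}")

    if not 1 <= month <= 12:
        raise ValueError(f"month must be between 1 and 12, got {month}")
    if not 1000 <= year <= 9999:
        raise ValueError(f"year must have 4 digits, got {year}")

    # Return only the known keys so unexpected extra arguments are dropped.
    return {"month": month, "year": year}


print(parse_signup_args('{"month": 12, "year": 2025}'))  # {'month': 12, 'year': 2025}
```

If validation fails, one option is to send the error text back to the model as the tool result so it can correct the call.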

2. Integrating and Using the Tool in the Agent Workflow

The llm_prompt function below sends a user prompt to an LLM with tool access enabled. If the LLM requests a tool call, llm_prompt looks up the corresponding Python function and executes it with the parsed arguments. The result is appended to the conversation history, and the LLM is called again to generate a follow-up response. The function returns either the LLM’s direct reply or, when a tool was used, the follow-up response that incorporates the tool result.

    import json

    import openai  # openai>=1.0; reads the API key from the OPENAI_API_KEY environment variable

    # `tool` (the schema above) and get_user_signups_by_month_year must be defined before this is called.

    def llm_prompt(prompt, model="gpt-4o"):
        # Prepare the initial user message for the model
        messages = [{"role": "user", "content": prompt}]

        # Call the language model and let it decide whether to use the tool
        response = openai.chat.completions.create(
            model=model,
            messages=messages,
            tools=[tool],
            tool_choice="auto",
            temperature=0.7,
        )

        # Get the model's first response message (prints are debug output)
        print("response", response)
        message = response.choices[0].message
        print(message)

        # If the model did not request a tool call, return its direct response
        if not message.tool_calls:
            return message.content

        # Map tool names to the local functions that implement them
        function_map = {
            "get_user_signups_by_month_year": get_user_signups_by_month_year
        }

        # Keep the assistant message that carries the tool call(s) in the history
        messages.append(message.model_dump(exclude_none=True))

        # Answer every requested tool call; the API expects one "tool" message per call
        for tool_call in message.tool_calls:
            print(tool_call)

            # Get the function from the map and validate it
            func = function_map.get(tool_call.function.name)
            if not func:
                return f"Unknown tool: {tool_call.function.name}"

            # Parse the JSON-encoded arguments and call the function
            args = json.loads(tool_call.function.arguments)
            result = func(**args)

            # Append the tool call result to the message history
            messages.append({
                "role": "tool",
                "tool_call_id": tool_call.id,
                "name": tool_call.function.name,
                "content": str(result),
            })

        # Call the model again with the updated context to generate a follow-up response
        followup = openai.chat.completions.create(
            model=model,
            messages=messages,
        )

        # Return the content of the follow-up message
        return followup.choices[0].message.content
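The dispatch step in the middle of this function can be exercised without an API key. The sketch below is an assumption-laden stand-in, not the project's code: `run_tool_call` is a hypothetical helper, the database function is replaced by a stub that returns a fixed value, and `fake_call` only mimics the three attributes the dispatch logic reads (`id`, `function.name`, `function.arguments`).

```python
import json
from types import SimpleNamespace


def get_user_signups_by_month_year(month, year):
    # Stub for the real database-backed function; always returns 3.
    return 3


function_map = {"get_user_signups_by_month_year": get_user_signups_by_month_year}


def run_tool_call(tool_call):
    """Execute one tool call and build the "tool" message for the history."""
    func = function_map.get(tool_call.function.name)
    if func is None:
        content = f"Unknown tool: {tool_call.function.name}"
    else:
        args = json.loads(tool_call.function.arguments)
        content = str(func(**args))
    return {"role": "tool", "tool_call_id": tool_call.id, "content": content}


# Fake tool call shaped like the object the dispatch code expects.
fake_call = SimpleNamespace(
    id="call_demo_1",
    function=SimpleNamespace(
        name="get_user_signups_by_month_year",
        arguments='{"month": 12, "year": 2025}',
    ),
)

print(run_tool_call(fake_call))
# {'role': 'tool', 'tool_call_id': 'call_demo_1', 'content': '3'}
```

Separating the dispatch logic this way makes it possible to unit test tool handling on its own, independent of model behavior.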

Here’s what the complete flow looks like in action: